Simulations & Reinforcement Learning

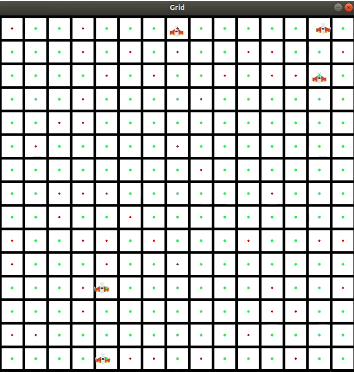

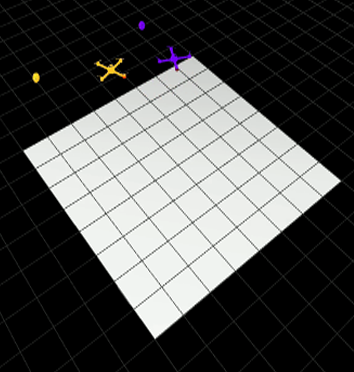

While traversing the environment, the drones might have to map certain locations (cells/rasters) for a longer period of time for collecting multiple samples at that location due to the increased damage or noise in measurements, we call these locations with this type of uncertainty as anomalous. We used open-source tools to build a 2D and 3D simulation where the drones pick amongst actions to cover a grid optimally. The major difference between the 2D and 3D simulation is the number of degrees of freedom the drones have. In the 3D simulation, the drones have 6 degrees of freedom whereas in the 2D simulation the drones only have 3 degrees of freedom. The difference in degrees of freedom increases the complexity of decisions that the drones have to make at each time step. Screenshots from the simulation are shown below. In order to start from a less complex task (lesser degrees of freedom) for the reinforcement learning algorithm to learn, we train and evaluate our agents on the 2D simulation to begin with.

The cells to be mapped by the drones are represented as grids. In the 2D simulation, the red dots represent anomalous cells and the drones require multiple passes/samples to reduce its uncertainty. Once all the cells are mapped by the drone swarm, the simulation terminates. The agent gets a reward every time it successfully maps a cell and a relatively higher reward when it maps an anomalous cell. There is a negative reward, i.e a penalty that exponentially increases based on distance to the unmapped anomalous cells.

We used Deep Q-Learning algorithm for training the agent which controls the drones. The agent has a Multi-Layer Perceptron (MLP) as a policy for each of the drone. These MLPs map a sparse representation of the input state to an action for each of the drone at a time-step. During training, the agent randomly explores the environment and collects information and stores them in a buffer which is then later sampled from at intervals and used to train the MLP. After training the agent for certain number of steps, we observe that the algorithm struggles to pick the perceived best action and the drone moves to one side of the environment, i.e it always picks the same action every time-step. We describe this as a form of action collapse. To possibly overcome this problem, we used actor-critic methods with policy gradients to update the actor and critic network. The agent is trained using Advantage Actor-Critic (A2C) algorithm. Both Actor and Critic are Multi-Layer Perceptron network with memory state implemented using Gated Recurrent Unit (GRU). The agent provides a sample of probabilities over the set of discrete actions and we sample from that set for the agent to act. We observe that when we decrease the number of total steps the agent is trained, we are able to avoid the problem of action collapse. We hypothesize that this could be because as the number of steps increases, the drones memorize the most frequent action and repeats the same during the test time which is comparable to the problem of overfitting in machine learning.

An example of 3D simulation. (The code for the 3D simulation is taken and modified from https://github.com/sgowal/swarmsim)